Modeling Exercises (Solutions)

Contents

# If you are using Anconda, please re-download this file to current directory:

# https://raw.githubusercontent.com/shawnrhoads/gu-psyc-347/master/course-env.yml

# Then, run this cell

# !conda env update --file course-env.yml

Modeling Exercises (Solutions)¶

These exercises were inspired by and adapted from Neuromatch Academy [CC BY 4.0] and DartBrains [CC BY-SA 4.0 License].

We will continue to use data from O’Connell, et al. (2021) Reduced social distancing during the COVID-19 pandemic is associated with antisocial behaviors in an online United States sample. PLoS ONE.

This study used a linear model to assess whether social distancing behaviors (early in the COVID-19 pandemic) was associated with self-reported antisocial behavior. To measure one index of social distancing behavior, participants were presented with an image of an adult silhouette surrounded by a rectangular border. They were asked to click a point in the image that represents how far away they typically stood from other individuals.

Here is a heatmap showing how far participants reported standing from other individuals in the past week, with dark maroon indicating a higher density of responses obtained from a kernel density estimation. The mean response coordinate, +, represents a distance of approximately 98 inches (8.2 feet; 2.5 m).

For this exercise, we will reproduce the results from O’Connell et al. (2021) that distance kept from others in the past week can be expressed as a function of antisocial behavior, controlling for age, sex, education, household income, high-risk status, and PPE use frequency.

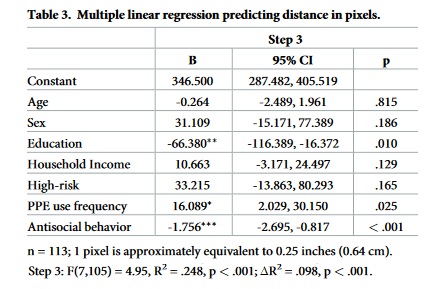

We will reproduce the beta coefficients from Table 3 in the paper.

Table of Contents¶

Key¶

# [INSERT CODE BELOW]: indicates where you should insert your own code, feel free to replace with a comment of your own...: indicates a location where you should insert your own coderaise NotImplementedError("Student exercise: *"): delete this line once you have added your code

Exploring the Data¶

# here we will import all of our packages for the exercise

from scipy.optimize import minimize # finding optimal params in models

from scipy import stats # statistical tools

import numpy as np # matrix/array functions

import pandas as pd # loading and manipulating data

import requests # downloading data from the internet

import ipywidgets as widgets # interactive display

import matplotlib.pyplot as plt # plotting

%matplotlib inline

# please load in the data into a pandas DataFrame,

# then print the first five rows to check that everything loaded correctly

# hint: we've done this in previous exercises

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: load OConnell_COVID_MTurk_noPII_post_peerreview.csv file as pandas dataframe, then delete this line")

#solution:

# please load in the data into a pandas DataFrame,

# then print the first five rows to check that everything loaded correctly

# hint: we've done this in previous exercises

url = 'https://raw.githubusercontent.com/shawnrhoads/gu-psyc-347/master/docs/static/data/OConnell_COVID_MTurk_noPII_post_peerreview.csv'

df = pd.read_csv(url)

display(df.head())

| subID | mturk_randID | suspect_itaysisso | Country | Region | ISP | loc_US | loc_state | loc_zipcode | loc_County | ... | education_4yr | STAB_total_centered | STAB_total_min32 | silhouette_dist_X_min81 | silhouette_dist_X_inches | violated_distancing | STAB_rulebreak_rmECONOMIC | STAB_total_rmECONOMIC | STAB_total_rmECONOMIC_centered | household_income_coded_centered | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1001 | 8797 | 0 | United States | CT | AS7015 Comcast Cable Communications, LLC | Yes | Connecticut | 6511 | New Haven County | ... | 0 | -3.946565 | 19 | 441.0 | 110.332750 | 0 | 9 | 48 | -2.076336 | 1.269231 |

| 1 | 1002 | 3756 | 0 | United States | IL | AS7018 AT&T Services, Inc. | Yes | California | 90280 | Los Angeles County | ... | 0 | 39.053436 | 62 | 287.0 | 71.803856 | 1 | 24 | 88 | 37.923664 | -3.730769 |

| 2 | 1003 | 3798 | 0 | United States | OH | AS10796 Charter Communications Inc | Yes | Ohio | 44883 | Seneca County | ... | 0 | 40.053436 | 63 | 313.0 | 78.308731 | 0 | 23 | 85 | 34.923664 | -2.730769 |

| 3 | 1004 | 2965 | 0 | United States | TX | AS7018 AT&T Services, Inc. | Yes | Texas | 77019 | Harris County | ... | 1 | -9.946565 | 13 | 452.0 | 113.084820 | 0 | 8 | 42 | -8.076336 | NaN |

| 4 | 1005 | 5953 | 0 | United States | NC | AS20115 Charter Communications | Yes | North Carolina | 28334 | Sampson County | ... | 0 | -17.946566 | 5 | 297.0 | 74.305733 | 0 | 8 | 34 | -16.076336 | -2.730769 |

5 rows × 126 columns

Oh no! Our data contain NaNs (participants either did not answer the question, or did not meet inclusion criteria for certain variables). Let’s get rid of them using the code below:

var_names = ['age_centered',

'sex_1f',

'education_4yr',

'household_income_coded_centered',

'highrisk_self_or_livewith',

'ppe_freq_coded',

'STAB_total_centered',

'silhouette_dist_X_min81']

df.dropna(subset=var_names,

inplace=True)

Ordinary Least Squares¶

Now that we’ve loaded in our data, let’s explore their underlying distributions and then fit these data to a linear model by minimizing the mean squared error (MSE)–this is also known as ordinary least squares.

This model can be expressed mathematically as:

Our goal is to estimate the \(\beta\) parameters that explain the relationships in our model.

To accomplish this, we need to do the following:

Convert the dependent variable column (1) in the pandas DataFrame to a numpy array

ywith size(n_subjects, 1).Convert the independent variable columns (7) in the pandas DataFrame to a numpy array

Xwith size(n_subjects, 8), the first column should represent an intercept and contain ones.Define a function

ols()that takes inputsX(numpy array) andy(numpy array), and outputsbeta_hats(list or numpy array).Run the function and find the optimal parameter estimates that fit the model.

# get number of subjects and create X and y

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: initialize n_subjects, X and y, then delete this line")

n_subjects = len(...)

# store columns of interest in a single numpy array

# age_centered - age (continuous; 18-65)

# sex_1f - sex (0: male; 1: female)

# education_4yr - education (0: < 4-year degree; 1: >= 4-year degree)

# household_income_coded_centered - household income (continuous)

# highrisk_self_or_livewith - high-risk for serious illness for self or someone the participant lives with (0: no; 1: yes)

# ppe_freq_coded - PPE use frequency (continuous; 1: Never, 5: Always)

# stab_total_centered - antisocial behavior (continuous)

# silhouette_dist_x_min81 - distance kept from others in past week (continuous)

x0 = np.ones((n_subjects,))

x1 = ... # age

x2 = ... # sex

x3 = ... # edu

x4 = ... # income

x5 = ... # high-risk

x6_new = []

for ans in df['ppe_freq_coded']:

if ans == 'Never':

x6_new.append(1)

elif ans == 'Rarely':

x6_new.append(2)

elif ans == 'Sometimes':

x6_new.append(3)

elif ans == 'Often':

x6_new.append(4)

elif ans == 'Always':

x6_new.append(5)

x6 = np.array(x6_new) # ppe

x7 = ... # antisociality (stab)

X = np.stack((...), axis=1)

y = ... # distance from others

# solution:

# get number of subjects and create X and y

n_subjects = len(df)

# store columns of interest in a single numpy array

# age_centered - age (continuous; 18-65)

# sex_1f - sex (0: male; 1: female)

# education_4yr - education (0: < 4-year degree; 1: >= 4-year degree)

# household_income_coded_centered - household income (continuous)

# highrisk_self_or_livewith - high-risk for serious illness for self or someone the participant lives with (0: no; 1: yes)

# ppe_freq_coded_2 - PPE use frequency (continuous; 1: Never, 5: Always)

# stab_total_centered - antisocial behavior (continuous)

# silhouette_dist_x_min81 - distance kept from others in past week (continuous)

x0 = np.ones((n_subjects,))

x1 = df['age_centered'].values # age

x2 = df['sex_1f'].values # sex

x3 = df['education_4yr'].values # edu

x4 = df['household_income_coded_centered'].values # income

x5 = df['highrisk_self_or_livewith'].values # high-risk

x6_new = []

for ans in df['ppe_freq_coded']:

if ans == 'Never':

x6_new.append(1)

elif ans == 'Rarely':

x6_new.append(2)

elif ans == 'Sometimes':

x6_new.append(3)

elif ans == 'Often':

x6_new.append(4)

elif ans == 'Always':

x6_new.append(5)

x6 = np.array(x6_new) # ppe

x7 = df['STAB_total_centered'].values # antisociality (stab)

X = np.stack((x0, x1, x2, x3, x4, x5, x6, x7), axis=1)

y = df['silhouette_dist_X_min81'].values # distance from others

# using a for loop, please print the means and standard deviations of these variables

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: please print the means and standard deviations of these variables, then delete this line")

for name, data in zip(var_names, ...):

print(f'{name}: mean={np.mean(data):.2f}, sd={np.std(data):.2f}')

#solution:

# using a for loop, please print the means and standard deviations of these variables

for name, data in zip(var_names, [x1, x2, x3, x4, x5, x6, x7, y]):

print(f'{name}: mean={np.mean(data):.2f}, sd={np.std(data):.2f}')

age_centered: mean=0.38, sd=10.26

sex_1f: mean=0.42, sd=0.49

education_4yr: mean=0.54, sd=0.50

household_income_coded_centered: mean=0.00, sd=1.79

highrisk_self_or_livewith: mean=0.36, sd=0.48

ppe_freq_coded: mean=2.88, sd=1.62

STAB_total_centered: mean=-4.95, sd=25.17

silhouette_dist_X_min81: mean=390.70, sd=131.67

# define a function called ols() that computes betas for the model

# no need to edit anything here

# see module-02-00_Linear-Modeling for derivation

def ols(X, y):

beta_hat = np.linalg.inv(X.T @ X) @ X.T @ y

return beta_hat

# compute the betas

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: compute beta_hats using the function we just defined, then delete this line")

beta_hats = ols(...)

# solution:

# compute the betas

beta_hats = ols(X, y)

Now, that we have our fitted parameters, please print our 8 coefficients corresponding to our variables in a for loop below.

# print the names of each variable

x_vars = ['Constant', 'Age','Sex','Education',

'Household Income','High-risk','PPE Use',

'Antisocial behavior']

for variable, beta_value in zip(x_vars, ...):

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: complete the string below, then delete this line")

print(fr'coefficient for {...} = {...:.2f}')

#solution:

# print the names of each variable

x_vars = ['Constant', 'Age','Sex','Education',

'Household Income','High-risk','PPE Use',

'Antisocial behavior']

for variable, beta_value in zip(x_vars, beta_hats):

print(fr'coefficient for {variable} = {beta_value:.2f}')

coefficient for Constant = 346.50

coefficient for Age = -0.26

coefficient for Sex = 31.11

coefficient for Education = -66.38

coefficient for Household Income = 10.66

coefficient for High-risk = 33.21

coefficient for PPE Use = 16.09

coefficient for Antisocial behavior = -1.76

Awesome! We were able to recover the beta coefficients from the model in O’Connell et al. (2021) using least-squares minimization (which has an analytic solution).

Here is the table for comparison:

Maximum Likelihood Estimation¶

Above, we made the assumption that the data were drawn from a linear relationship with noise added, and found an effective approach for estimating model parameters based on minimizing the mean squared error.

We treated the noise as simply a nuisance, but what if we factored it directly into our model?

Recall our linear model:

The noise component \(\epsilon\) is often modeled as a random variable drawn from a Gaussian distribution (also called the normal distribution).

The Gaussian distribution is described by its probability density function (pdf):

and is dependent on two parameters: the mean \(\mu\) and the variance \(\sigma^2\). We often consider the noise signal to be Gaussian “white noise”, with zero mean and unit variance:

Before we begin, use the widget below to see how varying the \(\mu\) and \(\sigma\) parameters change the location and shape of samples.

# interactive display (if not using Jupyter Book)

%config InlineBackend.figure_format = 'retina'

@widgets.interact(mu=widgets.FloatSlider(0.0, min=-2.0, max=2.0),

sigma=widgets.FloatSlider(1.0, min=0.5, max=2.0))

def plot_normal_dist(mu=0, sigma=1):

# Generate pdf & samples from normal distribution with mu/sigma

rv = stats.norm(mu, sigma)

x = np.linspace(-5, 5, 100)

y = rv.pdf(x)

samples = rv.rvs(1000)

# Plot

fig, ax = plt.subplots()

ax.hist(samples, 20, density=True, color='g', histtype='stepfilled', alpha=0.8,

label='histogram')

ax.plot(x, y, color='orange', linewidth=3, label='pdf')

ax.vlines(mu, 0, rv.pdf(mu), color='red', linewidth=3, label='$\mu$')

ax.vlines([mu-sigma, mu+sigma], 0, rv.pdf([mu-sigma, mu+sigma]), colors='red',

color='b', linewidth=3, label='$\sigma$')

ax.set(xlabel='x', ylabel='probability density', xlim=[-5, 5], ylim=[0, 1.0])

ax.legend()

Now that we have a model of our noise component \(\epsilon\) as random variable, how do we incorporate this back into our original linear model from before? Consider again our simplified model \(y = \beta \cdot x + \epsilon\) where the noise has zero mean and unit variance \(\epsilon \sim \mathcal{N}(0, 1)\). We can now also treat \(y\) as a random variable drawn from a Gaussian distribution where \(\mu = \beta \cdot x\) and \(\sigma^2 = 1\):

which is to say that the probability of observing \(y\) given \(x\) and parameter \(\beta\) is

Now that we have our probabilistic model, we turn back to our original challenge of finding a good estimate for \(\beta\) that fits our data. Given the inherent uncertainty when dealing in probabilities, we talk about the likelihood that some estimate \(\hat \beta\) fits our data. The likelihood function \(\mathcal{L(\beta)}\) is equal to the probability density function parameterized by that \(\theta\):

Let’s implement the likelihood function \(\mathcal{L}(\beta|x,y)\) for our linear model where \(\sigma = 1\).

After implementing this function, we can produce probabilities that our estimate \(\hat{\beta}\) generated the provided observations.

def loglikelihood(beta_hat, X, y):

"""The likelihood function for a linear model with noise sampled from a Gaussian distribution with zero mean and unit variance.

Args:

beta_hat (float): Estimates of the slope parameters.

x (ndarray): An array of shape (samples,) that contains the input values.

y (ndarray): An array of shape (samples,) that contains the corresponding measurement values to the inputs.

Returns:

ndarray: the likelihood values for the beta_hat estimates

"""

# compute the predicted y based on inputted beta_hats

# here, dot product is equivalent to the weighted sum of values

# (i.e., b0 + b1*x1 + b2*x2 + .. bd*xd)

predicted_y = np.dot(X, beta_hat)

# Compute Gaussian likelihood

pdf = stats.norm.logpdf(y, loc=predicted_y)

return pdf

Finding the Maximum Likelihood Estimator¶

We want to find the parameter value \(\hat\beta\) that makes our data set most likely:

We discussed how taking the logarithm of the likelihood helps with numerical stability, the good thing is that it does so without changing the parameter value that maximizes the likelihood. Indeed, the \(\textrm{log}()\) function is monotonically increasing, which means that it preserves the order of its inputs. So we have:

Now substituting our specific likelihood function and taking its logarithm, we get: $\( \hat{\beta}_{\textrm{MLE}} = \underset{\beta}{\operatorname{argmax}} [-\frac{N}{2} \operatorname{log} 2\pi\sigma^2 - \frac{1}{2\sigma^2}\sum_{i=1}^N (y_i-\beta \cdot x_i)^2]. \)$

Note that maximizing the log likelihood is the same as minimizing the negative log likelihood (in practice optimization routines are developed to solve minimization not maximization problems). Because of the convexity of this objective function, we can take the derivative of our negative log likelihhood, set it to 0, and solve - just like our solution to minimizing MSE.

Note that this looks remarkably like the equation we had to solve for the optimal MSE estimator, and, in fact, we arrive to the exact same solution!

or

Even though we already know the analytic solution to solve for our \(\hat{\beta}\) coefficients, let’s continue on to solve for them bu minimizing the negative loglikelihood.

# please compute the negative loglikelihood in this new function

# note: this function calls the likelihood() function we defined earlier

# hint: use the function: np.sum()

def minimize_negll(params, X, y):

"""The likelihood function for a model with noise sampled from a

Gaussian distribution with zero mean and unit variance.

Args:

params (list)

X (ndarray): An array of shape (samples,) that contains the input values.

y (ndarray): An array of shape (samples,) that contains the corresponding

measurement values to the inputs.

Returns:

scalar: the neg sum of the loglikelihoods for the beta_hat estimate

"""

# Compute Gaussian loglikelihood

loglik = loglikelihood(params, X, y)

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: compute the negative sum of the loglikelihoods computed above, then delete this line")

negLL = ...

return negLL

#solution:

# please compute the negative loglikelihood in this new function

# note: this function calls the likelihood() function we defined earlier

def minimize_negll(params, X, y):

"""The likelihood function for a model with noise sampled from a

Gaussian distribution with zero mean and unit variance.

Args:

params (list)

x (ndarray): An array of shape (samples,) that contains the input values.

y (ndarray): An array of shape (samples,) that contains the corresponding

measurement values to the inputs.

Returns:

scalar: the neg sum of the loglikelihoods for the beta_hat estimate

"""

# Compute Gaussian loglikelihood

loglik = loglikelihood(params, X, y)

negLL = -np.sum(loglik)

return negLL

# specify starting points

init_x = (1,1,1,1,1,1,1,1) # can be anything

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: complete the code below to find the optimal params, then delete this line")

# minimize MSE for linear function using scipy.optimize.minimize

mle_results = minimize(..., # objective function

init_x, # estimated starting points

args=(..., ...)) # arguments

# specify starting points

init_x = (350, -.2, 52, -60, 10, 30, 25, -1)

# minimize MSE for linear function using scipy.optimize.minimize

mle_results = minimize(minimize_negll, # objective function

init_x, # estimated starting points

args=(X, y), # arguments

method = 'Nelder-Mead')

Now, that we have our fitted parameters (now using MLE), please print our 8 coefficients corresponding to our variables in a for loop below.

# print the names of each variable

x_vars = ['Constant', 'Age','Sex','Education',

'Household Income','High-risk','PPE Use',

'Antisocial behavior']

# [INSERT CODE BELOW]

raise NotImplementedError("Student exercise: complete the code below, then delete this line")

# think about where you stored the results of the minimization

mle_betas = ...

for variable, beta_value in zip(x_vars, mle_betas):

print(fr'coefficient for {...} = {...:.2f}')

#solution:

# print the names of each variable

x_vars = ['Constant', 'Age','Sex','Education',

'Household Income','High-risk','PPE Use',

'Antisocial behavior']

mle_betas = mle_results.x

for variable, beta_value in zip(x_vars, mle_betas):

print(fr'coefficient for {variable} = {beta_value:.2f}')

coefficient for Constant = 346.50

coefficient for Age = -0.26

coefficient for Sex = 31.11

coefficient for Education = -66.38

coefficient for Household Income = 10.66

coefficient for High-risk = 33.22

coefficient for PPE Use = 16.09

coefficient for Antisocial behavior = -1.76

Wow, how cool is that?! We can recover the same parameters by minimizing the loglikelihood as we did earlier using least squares minimization.

Bonus: Explained Variance¶

Sometimes we want a single metric to quantify overall how well our model was able to explain variance in the data. There are many metrics that can provide a quantitative measure of goodness of fit.

Here we will calculate \(R^2\) using the following formula:

where \(y_i\) is the measured value of the voxel at timepoint \(i\), \(\hat y_i\) is the predicted value for time point \(i\), and \(\bar y\) is the mean of the measured voxel timeseries.

\(R^2\) will lie on the interval between \([0,1]\) and can be interpreted as percentage of the total variance in \(Y\) explained by the model, \(X\), where 1 is 100% and 0 is none.

\(R^2\) is also used to compare model fits, similar to how we \(BIC\) used in the nonlinear modeling tutorial (see Table 3 in paper, which assessed the difference in \(R^2\) across models to select the best-fitting model).

def r_squared(y, predicted_y):

SS_total = np.sum((y - np.mean(y))**2)

SS_residual = np.sum((y - predicted_y)**2)

return 1-(SS_residual/SS_total)

predicted_y = np.dot(X, mle_results.x)

print(f"R^2: {r_squared(y, predicted_y):.3f}")

R^2: 0.248